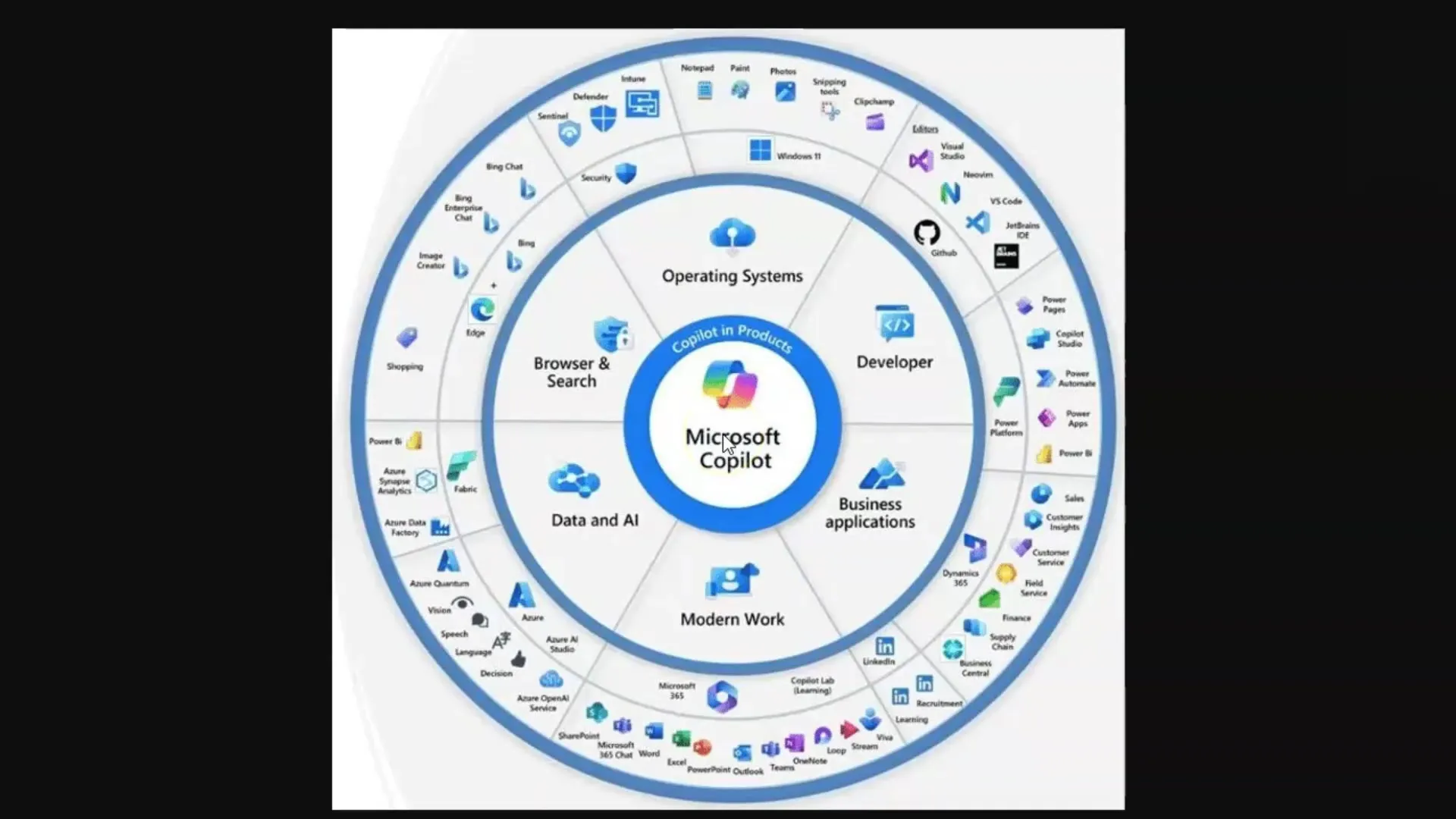

In this tutorial, you will learn how Large Language Models (LLMs) and diffusion models work, especially in the context of Microsoft Copilot. These technologies are crucial for generating text and images used in various artificial intelligence applications. To fully harness the potential of these tools, it is important to understand the fundamental concepts behind them.

Key Takeaways

- Large Language Models (LLMs) generate text based on patterns learned from a large corpus of training text.

- Diffusion models create images and learn through the processing of image-text pairs.

- Token limits are a central constraint when working with LLMs.

- Effective prompt engineering is important to obtain high-quality responses from LLMs.

Step-by-Step Guide

Step 1: Basic Understanding of Large Language Models (LLMs)

To understand LLMs, start from the fact that they are language models trained on a huge amount of text. These models answer questions by drawing on the patterns and information learned from that text. As a mental picture, put yourself in the model's position: imagine you are the computer that has to find the information.

You ask a question, and the model looks for the appropriate words in its "memory," which is built from its training data. The way you phrase the question therefore matters; the practice of asking the right questions is known as "prompt engineering".

Step 2: Tokens and Their Meaning

An LLM processes text by breaking it down into "tokens," smaller units that represent words or pieces of words. On average, a token corresponds to about four characters of English text, or roughly three-quarters of a word. Tokens matter because each model can only process a certain number of them at once, known as its token limit.
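The rule of thumb above can be turned into a quick estimate. The following is a minimal sketch, not a real tokenizer: the function names are hypothetical, and actual token counts depend on the model's tokenizer and on the language of the text.

```python
def estimate_tokens_from_chars(text: str) -> int:
    """Rough estimate: about 4 characters per token, rounded up."""
    # Ceiling division without floats: -(-n // 4) == ceil(n / 4).
    return -(-len(text) // 4)


def estimate_tokens_from_words(text: str) -> int:
    """Rough estimate: one token is about 3/4 of a word, so tokens ~ words * 4 / 3."""
    word_count = len(text.split())
    return -(-word_count * 4 // 3)


if __name__ == "__main__":
    sample = "Large Language Models break text into tokens."
    print("by characters:", estimate_tokens_from_chars(sample))
    print("by words:", estimate_tokens_from_words(sample))
```

The two estimates will usually differ slightly; either is good enough to judge whether a text is anywhere near a model's limit.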

Token limits vary by model: for instance, the standard GPT-3.5 model has a limit of about 4,000 tokens, while newer GPT-4 variants such as GPT-4 Turbo can work with up to 128,000 tokens. These limits affect how long a conversation can run and how much information the model can keep in view at once.
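To see what such limits mean in practice, here is a small sketch that checks how many tokens remain for a given model. It assumes, as is typical, that the limit covers the prompt and the reply together. The dictionary keys are illustrative labels rather than official model identifiers, and the numbers are simply the figures quoted in this tutorial.

```python
# Token limits as quoted in this tutorial; treat them as illustrative figures.
TOKEN_LIMITS = {
    "gpt-3.5": 4_000,
    "gpt-4": 128_000,
}


def remaining_tokens(model: str, prompt_tokens: int, reply_tokens: int) -> int:
    """Tokens left after the prompt and a reserved reply.

    A negative result means the request would exceed the limit.
    """
    return TOKEN_LIMITS[model] - prompt_tokens - reply_tokens


if __name__ == "__main__":
    for model in TOKEN_LIMITS:
        left = remaining_tokens(model, prompt_tokens=3_500, reply_tokens=1_000)
        status = "fits" if left >= 0 else "too long"
        print(f"{model}: {left} tokens left ({status})")
```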

Step 3: Dealing with Token Limitations

Since every language model has a token limit, it is crucial to account for it when working with LLMs. If the limit is exceeded, the model may "forget" the earlier parts of your conversation. Summarizing large texts or condensing them into bullet points helps keep the most relevant information within the limit.
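The "forgetting" effect can be illustrated with a simple sliding window over a conversation. This is a sketch under stated assumptions: it uses the rough four-characters-per-token estimate, the function names are hypothetical, and real chat products may handle long conversations differently (for example, by summarizing older messages).

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token, rounded up."""
    return -(-len(text) // 4)


def trim_history(messages: list[str], token_limit: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits the limit.

    Older messages that do not fit are dropped, which is why the model
    appears to forget the start of a long conversation.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > token_limit:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # Restore chronological order.
    return kept


if __name__ == "__main__":
    history = ["first question " * 10, "long answer " * 30, "follow-up question"]
    for message in trim_history(history, token_limit=100):
        print(message[:40])
```

Replacing the dropped messages with a short summary is one way to keep the key points available without spending many tokens on them.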

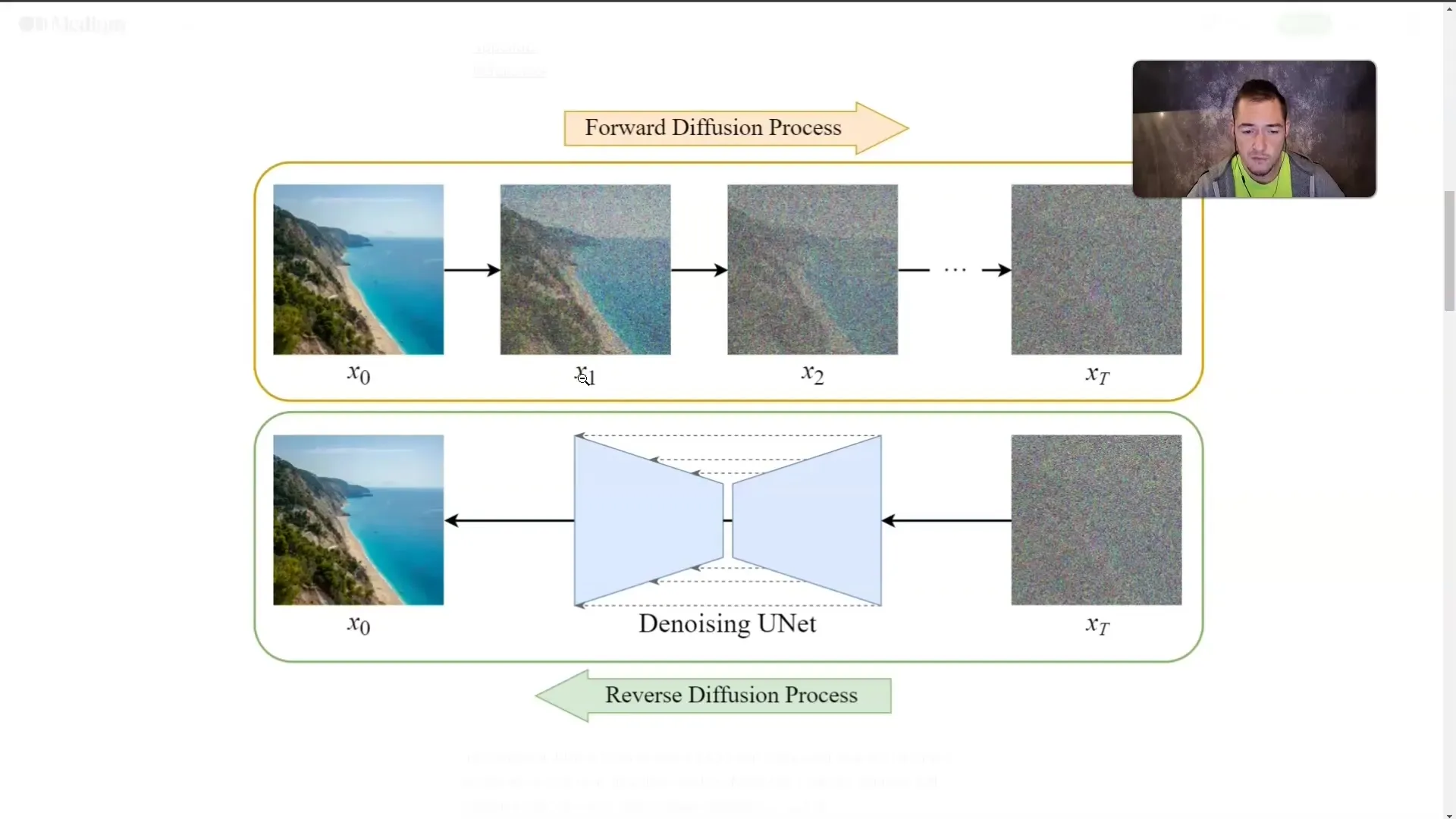

Step 4: Understanding Diffusion Models

In addition to LLMs, diffusion models are also of great importance. These models generate images and are trained on image-text pairs. During training, an image is gradually overlaid with "fog" (random noise) until it is no longer recognizable, and the model learns to reverse this process, recovering the image from behind the fog with the accompanying text as a guide.

This technique allows the model to generate an image from a descriptive text: starting from pure fog, it clears the image up step by step, guided by your description. The more detail you provide about the desired content, the more precisely the model can generate the image.
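The "fogging" step can be sketched in a few lines. The code below follows the commonly used DDPM-style formulation, in which a noisy image is a weighted mix of the original pixels and Gaussian noise. This is an assumption for illustration: the tutorial does not say which formulation Copilot's image generator uses, and the schedule values and function names here are hypothetical defaults. Only the noising direction is shown; the trained model that removes the noise is not included.

```python
import math
import random


def linear_beta_schedule(steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list[float]:
    """Per-step noise amounts, growing linearly from beta_start to beta_end."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * i / (steps - 1)
            for i in range(steps)]


def signal_fraction(betas: list[float], t: int) -> float:
    """Cumulative product of (1 - beta) up to step t.

    Close to 1 means the image is almost untouched; close to 0 means
    it has almost entirely disappeared into the fog.
    """
    alpha_bar = 1.0
    for beta in betas[: t + 1]:
        alpha_bar *= 1.0 - beta
    return alpha_bar


def add_noise(pixels: list[float], alpha_bar: float,
              rng: random.Random) -> list[float]:
    """Mix the original pixels with Gaussian noise for a given signal fraction."""
    keep = math.sqrt(alpha_bar)
    fog = math.sqrt(1.0 - alpha_bar)
    return [keep * p + fog * rng.gauss(0.0, 1.0) for p in pixels]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.8, -0.2, 0.5, 0.1]  # A tiny stand-in for real pixel values.
    betas = linear_beta_schedule(1000)
    for t in (0, 250, 999):
        a = signal_fraction(betas, t)
        print(f"step {t}: signal fraction {a:.4f}", add_noise(image, a, rng))
```

In this formulation, training shows the model noisy images at random steps and asks it to predict the noise that was added; generation then starts from pure noise and repeatedly removes the predicted noise, steered by the text description.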

Step 5: Applying the Concepts

Once you understand how LLMs and diffusion models work, the next step is to put this knowledge into practice. When using Microsoft Copilot, always ask precise and relevant questions to achieve the best results.

Whether you are generating text or creating images, the quality of your inputs will directly impact the quality of the outputs.
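One way to make inputs more precise is to assemble them from a few explicit parts. The helper below is a hypothetical sketch, not a Copilot feature or API: the field names (task, context, output format, constraints) are just one reasonable way to structure a prompt, and the resulting text is meant to be pasted into the chat.

```python
from typing import Sequence


def build_prompt(task: str, context: str = "", output_format: str = "",
                 constraints: Sequence[str] = ()) -> str:
    """Combine the parts of a request into one clearly structured prompt."""
    parts = [f"Task: {task.strip()}"]
    if context.strip():
        parts.append(f"Context: {context.strip()}")
    if output_format.strip():
        parts.append(f"Output format: {output_format.strip()}")
    # Each constraint becomes its own bullet so none is overlooked.
    for constraint in constraints:
        parts.append(f"- {constraint.strip()}")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        task="Summarize the attached meeting notes",
        context="The audience is a project manager who missed the meeting",
        output_format="Five bullet points",
        constraints=["Mention every decision that was made", "Keep it under 100 words"],
    ))
```

The same idea applies to image prompts: stating the subject, style, and composition separately tends to give the model more to work with than a single vague phrase.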

Summary

In this tutorial, you have learned the basic concepts of LLMs and diffusion models. You now understand how these technologies work, the role of tokens, and how important prompt engineering is for the quality of results. Understanding these concepts is crucial for effectively working with Microsoft Copilot and similar AI applications.

Frequently Asked Questions

What are Large Language Models? LLMs are language models trained on large amounts of text to generate text and answer questions.

What are diffusion models? Diffusion models are AI models that learn to generate images by gradually "fogging" training images with noise and learning to recover what is hidden behind the fog.

Why are tokens important? Tokens are the small units of text that LLMs process, and each model has a limit on how many tokens it can handle at once.

How can I work around the token limit? The limit itself cannot be removed, but you can stay within it by creating summaries or condensing texts into bullet points.

What is prompt engineering? Prompt engineering refers to the art of asking effective and precise questions to obtain high-quality responses from LLMs.