Large Language Models (LLMs) like Google Bard play a significant role in disseminating information and supporting users in various fields. While these technologies deliver impressive results, they also have limitations and challenges. In this tutorial, you will explore the limitations of Google Bard in more detail. The goal is to develop a critical understanding of how such models work so that you can better evaluate the information they provide and question it when necessary.

Key Insights

- Google Bard and similar models are not perfect and make errors.

- The outputs can be factually incorrect even if they seem convincing.

- You must always be critical and verify information before sharing it.

Step-by-Step Guide

Understanding the Error Rate

It is important to realize that Google Bard, like many other large language models, is not flawless. You have probably noticed that the quality of its outputs varies. If the results seem unsatisfactory, do not hesitate to be critical of them.

Accepting Incorrect Outputs

Google Bard's results can be partly inaccurate or even completely wrong. You may already have encountered this in your own experiments earlier in this course. It is crucial to understand that Bard's output is not always reliable.

Questioning the Dissemination of Information

If you have information that originates from Google Bard, always verify it before spreading it. You are responsible for ensuring that it is correct. If you are unsure, consult additional sources before sharing anything.

Analyzing Examples of Errors

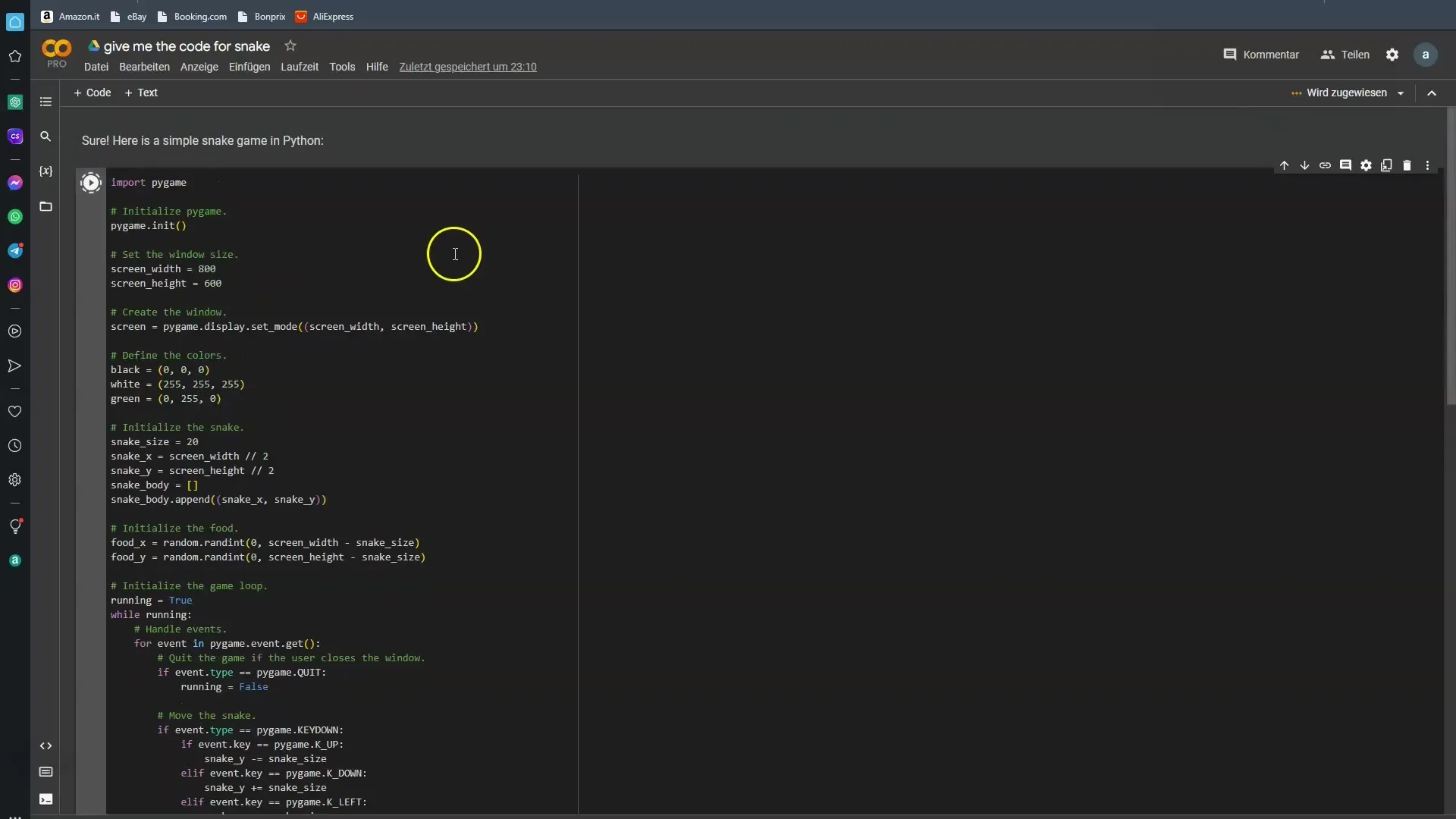

To illustrate the fallibility of Google Bard, it helps to look at concrete examples. I asked Bard to write the code for a simple Snake game. In most attempts, Bard failed at this task. Let's see whether we have better luck this time.

Practical Application of the Information

Especially with technical questions, be aware that Google Bard's responses may not meet your expectations. It is worth testing it under different conditions, for example with a rephrased prompt, to check whether you get the desired output.

The Necessity of Critical Thinking

Whenever you work with AI-generated results, it is essential to switch into critical-thinking mode. Google Bard can present you with a lot of information, but not all of it is accurate. The model is trained on vast amounts of data, and that data is not always error-free.

Future Improvements on the Horizon

Models are expected to keep improving. As the technology advances, their susceptibility to errors will hopefully decrease. For now, however, it is important to acknowledge their current limitations so that you can take them into account when using the system.

Summary

The world of AI assistants and AI-generated information is exciting and full of possibilities, but it also carries risks. Google Bard and similar models are a step into the future, but they come with limitations. It is crucial that you recognize these limitations and learn to view the information they provide from a critical perspective.

Frequently Asked Questions

What are the main errors of Google Bard?
The main weakness of Google Bard is that the information it provides can be inaccurate.

Why should I verify the information provided by Bard?
The information provided by Bard can be erroneous and is not always reliable.

Can I use Google Bard for technical questions?
Yes, but you should critically question the answers and consult other sources if necessary.

Who trained Google Bard?
Google Bard was developed and trained by Google. Models like it are built by people and trained largely on human-written data, so human errors can carry over into their outputs.

Will Google Bard improve in the future?
Yes, future versions are expected to be less error-prone.